Using Globus on Caviness

Overview

Personal data moved onto and off of the Caviness cluster with the Globus file transfer service passes through a staging area on the /lustre/scratch filesystem. This approach is necessary whenever guest collections (Globus sharing) are enabled on a collection:

- When you authenticate to activate your Caviness collection, Globus generates a certificate that identifies that activation.

- All navigation and file transfer requests against your Caviness collection are authenticated by that certificate.

- When you share that collection, you are essentially giving your certificate to other Globus users so that they can access your Caviness collection as you.

- Thus, any content to which you have access on your Caviness collection is also visible to the Globus users with whom you've shared access.

If we were to simply expose the entirety of /lustre/scratch on Globus, then when you enable sharing you could be providing access to much more than just your own files and directories. This would be a major data security issue, so we instead present a specific sub-directory to Globus: /lustre/scratch/globus/home.

A user requesting Globus-accessible storage is provided a directory, named with his or her numeric uid (e.g. 1001), which serves as that user's staging point.

Getting files off Caviness

- Make files already on /lustre/scratch visible on your Globus endpoint by moving them to /lustre/scratch/globus/home/<uid#> (using mv is very fast; duplicating the files using cp will take longer).

- Copy files from home or workgroup storage to /lustre/scratch/globus/home/<uid#> (using rsync or cp) to make them visible on your Globus endpoint.

- Please note: do not create symbolic links in /lustre/scratch/globus/home/<uid#> to files or directories that reside in your home or workgroup storage. They will not work on the Globus endpoint.
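The three rules above can be folded into a small shell function. This is a minimal sketch, not a tool provided by IT: stage_for_globus is a hypothetical name, and it assumes GLOBUS_HOME holds /lustre/scratch/globus/home/<uid#> (deriving it from your uid if unset). It moves items that already live on the same filesystem as the staging directory, copies anything from another filesystem, and refuses anything that is, or contains, a symbolic link.

```shell
# Sketch only: stage_for_globus is a hypothetical helper, not a Caviness command.
# Assumption: GLOBUS_HOME is /lustre/scratch/globus/home/<uid#>; derive it if unset.
: "${GLOBUS_HOME:=/lustre/scratch/globus/home/$(id -u)}"

stage_for_globus() {
    local src="$1"
    if [ ! -d "$GLOBUS_HOME" ]; then
        echo "stage_for_globus: $GLOBUS_HOME is not a directory" >&2
        return 1
    fi
    if [ ! -e "$src" ] && [ ! -L "$src" ]; then
        echo "stage_for_globus: '$src' does not exist" >&2
        return 1
    fi
    # find does not follow links by default, so this catches SRC itself being
    # a link as well as links nested anywhere inside a directory.
    if [ -n "$(find "$src" -type l -print -quit)" ]; then
        echo "stage_for_globus: '$src' is or contains a symbolic link," \
             "which will not work on the Globus endpoint" >&2
        return 1
    fi
    # Equal device numbers mean the same filesystem, where mv is a fast rename.
    if [ "$(stat -c %d -- "$src")" = "$(stat -c %d -- "$GLOBUS_HOME")" ]; then
        mv -- "$src" "$GLOBUS_HOME/"
    else
        # Home or workgroup storage: copy, leaving the original in place.
        cp -a -- "$src" "$GLOBUS_HOME/"
    fi
}
```

For example, stage_for_globus ~/results.tar would copy a (hypothetical) file from home storage into the staging directory, while the same call on an item already under /lustre/scratch would move it instead.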

Moving data to Caviness

- The /lustre/scratch/globus/home/<uid#> directory is writable by Globus, so data can be copied from a remote collection to your Caviness collection.

- Files copied to /lustre/scratch/globus/home/<uid#> via Globus can be moved elsewhere on /lustre/scratch (again, mv is very fast and duplication using cp will be slower) or copied to home and workgroup storage.

PLEASE BE AWARE that all data moved to Caviness consumes storage space on the shared scratch filesystem, /lustre/scratch. No quota limits are currently enforced on that filesystem, so it is up to users to refrain from filling it to capacity. Since Globus is often used to transfer large datasets, we ask that you be very careful NOT to push the filesystem to capacity.

Should capacity be neared, IT may request that you remove data on /lustre/scratch, including content in your Globus staging directory.
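Before starting a large transfer it is worth checking how full /lustre/scratch already is. The following is a minimal sketch built on the standard df command; the helper names and the default 90% threshold are assumptions for illustration, not an IT policy.

```shell
# Sketch only: scratch_usage_pct and check_scratch_headroom are hypothetical names.

# Print the used-capacity percentage (digits only) of the filesystem holding PATH.
scratch_usage_pct() {
    # df -P prints a header plus one POSIX-format line per filesystem;
    # column 5 is the used capacity, e.g. "73%".
    df -P "${1:-/lustre/scratch}" | awk 'NR == 2 { sub(/%$/, "", $5); print $5 }'
}

# Return 1 (with a warning) if usage is at or above LIMIT percent.
# Assumption: the default LIMIT of 90 is arbitrary.
check_scratch_headroom() {
    local path="${1:-/lustre/scratch}" limit="${2:-90}" pct
    pct="$(scratch_usage_pct "$path")"
    if [ -z "$pct" ]; then
        echo "check_scratch_headroom: could not read usage for $path" >&2
        return 2
    fi
    if [ "$pct" -ge "$limit" ]; then
        echo "WARNING: $path is ${pct}% full; hold off on large transfers." >&2
        return 1
    fi
    echo "$path is ${pct}% full."
}
```

Running check_scratch_headroom before queuing a transfer, and du -sh "$GLOBUS_HOME" to see how much your own staging directory holds, gives a quick picture of where you stand.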

PLEASE REFRAIN from modifying the group ownership and permissions on /lustre/scratch/globus/home/<uid#>. The group ownership is set to the "everyone" group and the permissions to 0700 (drwx------) to properly secure your data. Any change to the ownership or permissions of the directory could expose your data to other users of the Globus service.
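If you want to confirm that the directory is still locked down without changing anything, a read-only check is enough. This sketch (check_globus_home_perms is a hypothetical name) only reads the mode and group with GNU stat and never runs chmod or chgrp; if it reports a problem, it is probably best to contact IT rather than adjusting the permissions yourself.

```shell
# Sketch only: check_globus_home_perms is a hypothetical, read-only helper.
# Usage: check_globus_home_perms [DIR] [EXPECTED_GROUP]
check_globus_home_perms() {
    local dir="${1:-${GLOBUS_HOME:-/lustre/scratch/globus/home/$(id -u)}}"
    local want_group="${2:-everyone}" mode group
    # %a = octal permission bits, %G = group name of the owner group.
    mode="$(stat -c %a -- "$dir")" || return 2
    group="$(stat -c %G -- "$dir")" || return 2
    if [ "$mode" != "700" ]; then
        echo "WARNING: $dir has mode $mode (expected 700)" >&2
        return 1
    fi
    if [ "$group" != "$want_group" ]; then
        echo "WARNING: $dir has group $group (expected $want_group)" >&2
        return 1
    fi
    echo "$dir: mode $mode, group $group (OK)"
}
```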

Request a Globus home staging point

Available only to UD accounts (UDelNet ID), not to guest accounts (hpcguest«uid»).

Please submit a Research Computing High Performance Computing (HPC) Clusters Help Request: click the green Request Service button, complete the form, and in the problem details indicate that you are requesting a Globus home staging point on Caviness.

Where is my Globus home?

You can find your uid number using the id command:

```shell
$ id -u
1001
```

This user's Globus home directory would be /lustre/scratch/globus/home/1001. Users can append the following to the ~/.bash_profile file:

```shell
export GLOBUS_HOME="/lustre/scratch/globus/home/$(id -u)"
```

On login, the GLOBUS_HOME environment variable will point to your Globus home directory:

```shell
$ echo $GLOBUS_HOME
/lustre/scratch/globus/home/1001
$ ls -l $GLOBUS_HOME
total 100715
-rw-r--r-- 1 frey everyone 104857600 Jul 30 15:27 borkbork
drwxr-xr-x 2 frey everyone 95744 Aug 20 15:25 this_is_a_test
-rw-r--r-- 1 frey everyone 104857600 Jul 30 15:27 zeroes
```
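Re-running a setup step should not append the export line to ~/.bash_profile a second time. A minimal sketch (add_globus_home_to_profile is a hypothetical helper name) that writes the exact line shown above only if it is not already present:

```shell
# Sketch only: add_globus_home_to_profile is a hypothetical helper.
# Usage: add_globus_home_to_profile [PROFILE]   (default: ~/.bash_profile)
add_globus_home_to_profile() {
    local profile="${1:-$HOME/.bash_profile}"
    # Single quotes keep $(id -u) literal, so it is evaluated at each login.
    local line='export GLOBUS_HOME="/lustre/scratch/globus/home/$(id -u)"'
    touch -- "$profile" || return 1
    # -q quiet, -x whole-line match, -F fixed string (no regex metacharacters).
    if grep -qxF -- "$line" "$profile"; then
        return 0
    fi
    # If the file does not end in a newline, add one so lines are not joined.
    if [ -s "$profile" ] && [ -n "$(tail -c 1 -- "$profile")" ]; then
        printf '\n' >> "$profile"
    fi
    printf '%s\n' "$line" >> "$profile"
}
```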

Activating your collection

Start by navigating to the Globus web application (http://app.globus.org/) in your web browser. If you have previously logged in, you will be taken directly to the dashboard. Otherwise:

- Choose the University of Delaware as your organization and click the Continue button; on the next page enter your UDelNet Id and Password and continue on to the dashboard.

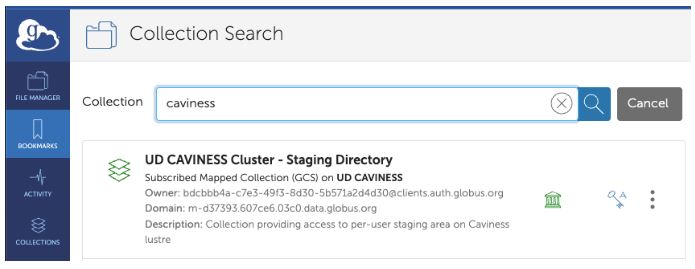

Click the “File Manager” button on the left side-panel. In the search box at the top of the page, enter "Caviness" and click the magnifying glass icon:

The "UD CAVINESS Cluster - Staging Directory" collection should be visible. Click on the collection; you will be asked to authenticate using your UDelNet Id and Password if it is the first time you access the collection.

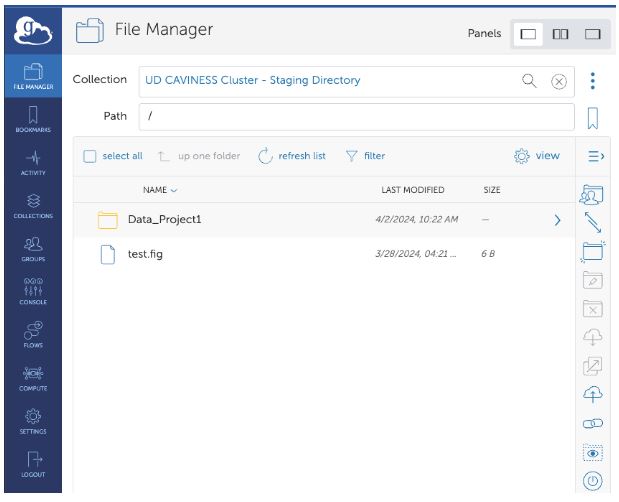

If successful (and you have a Globus home directory) the list of files in /lustre/scratch/globus/home/<uid#> should appear:

Guest collections

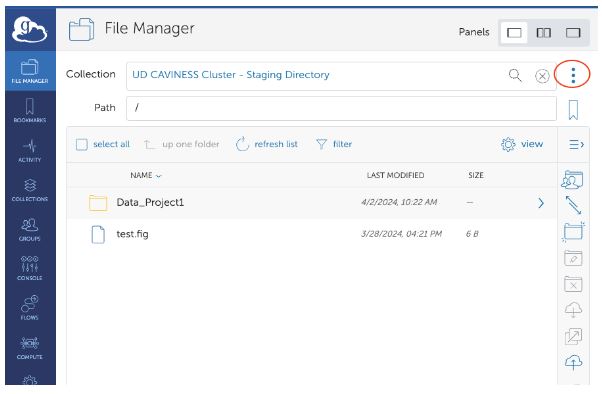

To share your files with others via Globus, you can create a Guest Collection, which shares a directory within your Globus home directory. To create a Guest Collection, first go to the collection details. You can either click the three dots next to the collection:

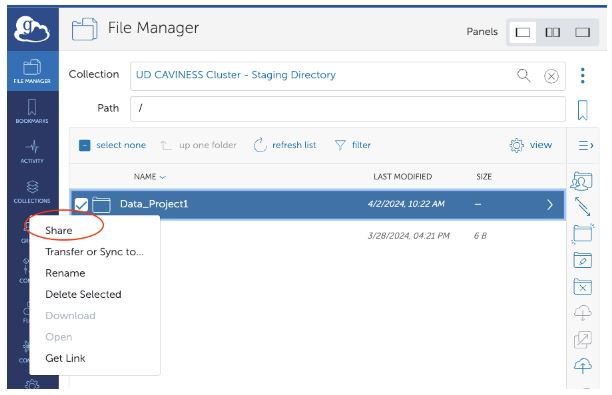

Or right-click on the directory you would like to share and select “Share”:

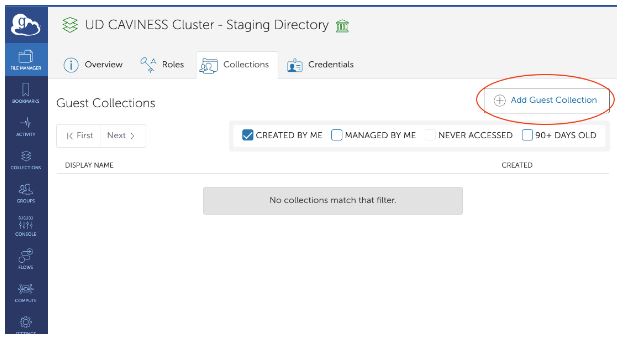

Click “Add Guest Collection” in the “Collections” tab:

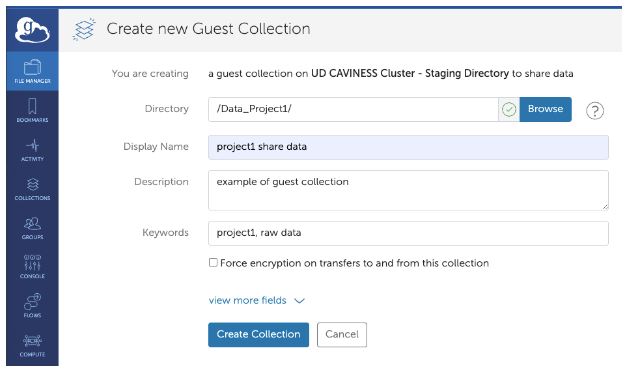

Make sure you set at least the “Directory” and “Display Name” fields properly. It’s a good idea to fill in the other fields as well.

Click the "Create Collection" button. If successful, the following screen will show "Shared with" listing just you.

Once the guest collection is created, you may want to set its permissions, e.g. sharing with specific individuals, making it public, or allowing read permissions only. Click the "Add Permissions - Share With" button to add (and notify) additional Globus users.

PLEASE NOTE: While we are testing this new service, please do not create public shares (accessible by any Globus user). This is especially true for writable shares; otherwise an arbitrary Globus user could easily upload enough data to fill /lustre/scratch to capacity and adversely impact all Caviness users.

Examples

Data Upload Repository

In this example, a directory is created under $GLOBUS_HOME to hold a dataset composed of many small (less than 4 GB) files. By default, Lustre places each file on a single Object Storage Target (OST) chosen as the file is created, effectively spreading the files across the Lustre storage pools:

```shell
$ mkdir -p $GLOBUS_HOME/incoming/20200103T1535
```

A series of transfers is scheduled in the Globus web interface, placing the files in this directory.

After the files have been uploaded, the user moves them from this staging directory to a shared location that their workgroup can access:

```shell
$ mkdir -p /lustre/scratch/it_nss/datasets
$ mv $GLOBUS_HOME/incoming/20200103T1535 /lustre/scratch/it_nss/datasets
```

Since the source and destination paths are both on the /lustre/scratch filesystem, the mv is nearly instantaneous.
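The directory names in these examples follow a YYYYMMDDTHHMM timestamp pattern. If you want to follow the same convention, a small sketch (new_incoming_dir is a hypothetical name, and GLOBUS_HOME is assumed to be set as described in "Where is my Globus home?") creates such a directory and prints its path:

```shell
# Sketch only: new_incoming_dir is a hypothetical helper.
# Creates $GLOBUS_HOME/incoming/<YYYYMMDDTHHMM> and prints its path.
new_incoming_dir() {
    local dir
    if [ -z "${GLOBUS_HOME:-}" ]; then
        echo "new_incoming_dir: GLOBUS_HOME is not set" >&2
        return 1
    fi
    # date +%Y%m%dT%H%M yields names like 20200103T1535.
    dir="$GLOBUS_HOME/incoming/$(date +%Y%m%dT%H%M)"
    mkdir -p -- "$dir" && printf '%s\n' "$dir"
}
```

Because mkdir -p is used, two calls within the same minute return the same directory rather than failing.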

Moving to Workgroup Storage

If the final destination of the incoming dataset is the workgroup storage (e.g. a path under $WORKDIR), the cp or rsync commands should be used. The rsync command is especially useful if you are updating a previously-copied dataset with new data:

```shell
$ rsync -ar $GLOBUS_HOME/incoming/20200103T1535/ $WORKDIR/datasets/2019-2020/
```

The trailing slashes ("/") matter; do not forget them. The one on the source is the significant one: with it, rsync copies the contents of 20200103T1535 into the destination directory, whereas without it rsync would create a 20200103T1535 subdirectory inside the destination. Any files present in both source and destination that have not been modified will be skipped, and any files in the source directory that are not present in the destination directory will be copied in full. Files present in the destination directory but not in the source directory will NOT be removed (add the --delete option to remove them).

See the man page for rsync for additional options.
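Because a forgotten trailing slash on the source silently changes where rsync puts the data, it can help to wrap the call. This sketch (sync_dataset is a hypothetical name) normalizes both paths to end in exactly one slash, creates the destination if needed, and passes any extra options through to rsync:

```shell
# Sketch only: sync_dataset is a hypothetical helper, not an installed command.
# Usage: sync_dataset SRC DEST [extra rsync options...]
sync_dataset() {
    if [ "$#" -lt 2 ]; then
        echo "usage: sync_dataset SRC DEST [rsync options...]" >&2
        return 2
    fi
    # Drop one trailing slash if present, then append exactly one, so rsync
    # always copies the contents of SRC into DEST.
    local src="${1%/}/" dest="${2%/}/"
    shift 2
    # Create DEST (and any missing parents); this happens even with --dry-run.
    mkdir -p -- "$dest" || return 1
    # -a: archive mode (recursive, preserving times, permissions, symlinks, etc.).
    # Extra options such as --delete or --dry-run --itemize-changes pass through.
    rsync -a "$@" "$src" "$dest"
}
```

For example, sync_dataset $GLOBUS_HOME/incoming/20200103T1535 $WORKDIR/datasets/2019-2020 --dry-run --itemize-changes previews what would be copied; rerun without those options to copy for real.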

Data Upload Repository (Large Files)

In the previous example the dataset consisted of many small (less than 4 GB) files. For very large files, performance can be increased on Lustre by splitting the file itself across multiple OSTs: the first 1 GB goes to OST 1, the next 1 GB to OST 2, etc. Here striping is configured at the directory level: all files subsequently created inside that directory inherit the directory's striping behavior.

The default striping on /lustre/scratch uses a single randomly-chosen OST for all of the file's content. In this example, we will create a directory whose files will stripe across two OSTs in 1 GB chunks:

```shell
$ cd $GLOBUS_HOME/incoming
$ mkdir 20200103T1552-2x1G
$ lfs setstripe --stripe-count 2 --stripe-size 1G 20200103T1552-2x1G
$ ls -ld 20200103T1552-2x1G
drwxr-xr-x 2 frey everyone 95744 Jan 3 15:55 20200103T1552-2x1G
$ lfs getstripe 20200103T1552-2x1G
20200103T1552-2x1G
stripe_count: 2 stripe_size: 1073741824 stripe_offset: -1
```

The choice of 1 GB was somewhat arbitrary; in reality, the stripe size is often chosen to reflect an inherent record size associated with the file format.

Globus is used to transfer a data file to this directory. To see how the file was split across OSTs, use the lfs getstripe command:

```shell
$ cd $GLOBUS_HOME/incoming/20200103T1552-2x1G
$ ls -l
total 38
-rw-r--r-- 1 frey everyone 10485760000 Jan 3 15:57 db-20191231.sqlite3db
$ lfs getstripe db-20191231.sqlite3db
db-20191231.sqlite3db
lmm_stripe_count:  2
lmm_stripe_size:   1073741824
lmm_pattern:       1
lmm_layout_gen:    0
lmm_stripe_offset: 2
        obdidx       objid        objid       group
             2   611098866   0x246ca0f2           0
             3   607328154   0x2433179a           0
```
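For a plain (RAID-0) layout like this one (lmm_pattern: 1), the OST holding any byte follows from simple arithmetic: the byte falls in chunk offset / stripe_size, and chunks rotate through the OSTs in the order of the obdidx column. The sketch below (ost_for_offset and stripe_chunks are hypothetical names, and the model ignores composite layouts) applies this to the 10485760000-byte file above: it spans 10 chunks of 1073741824 bytes, alternating between OST 2 and OST 3, so each OST stores 5 of them.

```shell
# Sketch only: hypothetical helpers modelling a plain round-robin stripe layout.
# Usage: ost_for_offset OFFSET STRIPE_SIZE OBDIDX...   (OSTs in getstripe order)
ost_for_offset() {
    if [ "$#" -lt 3 ] || [ "$2" -le 0 ]; then
        echo "usage: ost_for_offset OFFSET STRIPE_SIZE OBDIDX..." >&2
        return 2
    fi
    local offset="$1" size="$2" chunk slot
    shift 2
    # 0-based index of the STRIPE_SIZE-byte chunk containing OFFSET.
    chunk=$(( offset / size ))
    # Chunks rotate through the listed OSTs, so take the remainder.
    slot=$(( chunk % $# ))
    shift "$slot"
    printf '%s\n' "$1"
}

# Usage: stripe_chunks FILE_SIZE STRIPE_SIZE   (number of chunks, rounded up)
stripe_chunks() {
    echo $(( ($1 + $2 - 1) / $2 ))
}
```

For instance, ost_for_offset 5000000000 1073741824 2 3 prints 2, since byte 5,000,000,000 lies in chunk 4.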

When this file (or directory) is moved to another directory on /lustre/scratch, the striping is retained:

```shell
$ mv $GLOBUS_HOME/incoming/20200103T1552-2x1G/db-20191231.sqlite3db \
>   /lustre/scratch/it_nss/datasets
$ lfs getstripe /lustre/scratch/it_nss/datasets/db-20191231.sqlite3db
/lustre/scratch/it_nss/datasets/db-20191231.sqlite3db
lmm_stripe_count:  2
lmm_stripe_size:   1073741824
lmm_pattern:       1
lmm_layout_gen:    0
lmm_stripe_offset: 2
        obdidx       objid        objid       group
             2   611098866   0x246ca0f2           0
             3   607328154   0x2433179a           0
```
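Striping is part of the file's layout, which stays with the file as long as mv is a rename within the same filesystem; a copy instead creates a new file that takes the destination directory's default striping. One way to confirm that a move was a rename is to compare the inode number before and after, as in this sketch (mv_preserves_inode is a hypothetical name, and GNU stat is assumed):

```shell
# Sketch only: mv_preserves_inode is a hypothetical helper.
# Usage: mv_preserves_inode SRC DEST_DIR
# Moves SRC into DEST_DIR, then succeeds only if the inode number is unchanged,
# i.e. mv renamed the file in place instead of copying and deleting it.
mv_preserves_inode() {
    local src="$1" destdir="$2" before after
    before="$(stat -c %i -- "$src")" || return 2
    mv -- "$src" "$destdir/" || return 2
    after="$(stat -c %i -- "$destdir/$(basename -- "$src")")" || return 2
    [ "$before" = "$after" ]
}
```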