All About Lustre

The filesystems with which most users are familiar store data in discrete chunks called blocks on a single hard disk. Blocks have a fixed size (for example, 4 KiB), so a 1 MiB file will be split into 256 blocks (256 blocks x 4 KiB/block = 1024 KiB). The performance of this kind of filesystem depends on the speed at which blocks can be read from and written to the hard disk (input/output, or i/o). Different kinds of disk yield differing i/o performance: a solid-state disk (SSD) will move blocks back and forth faster than a basic SATA hard disk used in a desktop computer.
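The block arithmetic above can be written as a ceiling division. The sketch below assumes the 4 KiB block size from the example and ignores any space the filesystem spends on its own metadata; the function name blocks_needed is hypothetical.

```python
KIB = 1024          # bytes per KiB
MIB = 1024 * KIB    # bytes per MiB

def blocks_needed(file_size: int, block_size: int = 4 * KIB) -> int:
    """Number of fixed-size blocks a file of file_size bytes occupies."""
    # Integer ceiling division: a partially filled last block still uses a whole block.
    return (file_size + block_size - 1) // block_size

print(blocks_needed(1 * MIB))      # 256
print(blocks_needed(1 * MIB + 1))  # 257: one extra byte needs a 257th block
```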

Buying a better (and more expensive) disk is one way to improve i/o performance, but once the fastest, most expensive disk has been purchased, this path leaves no room for further improvement. The demands of an HPC cluster with several hundred (maybe even thousands of) compute nodes quickly outpace the speed at which a single disk can shuttle bytes back and forth. Parallelism saves the day: store the filesystem blocks on more than one disk and the i/o performance of the disks adds up (to a degree). For example, consider a computer that can move a block to a hard disk in 1 cycle, paired with hard disks that each require 4 cycles to write a block. Storing four blocks on just one hard disk would require 20 cycles: each block takes 1 cycle to move to the disk and 4 cycles to write, and each block must wait for the previous one to finish (4 blocks x 5 cycles/block = 20 cycles).

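With four disks used in parallel (example (b) above), the block writes overlap and the whole operation takes just 8 cycles: the four blocks still move to their disks one per cycle, but each disk starts writing as soon as its block arrives, so the last block arrives at cycle 4 and finishes writing at cycle 8.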
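The cycle counts above can be reproduced with a small simulation. This is a simplified model built only on the assumptions in the example: block transfers happen one at a time, blocks are assigned to disks round-robin, and a disk accepts a new block only after it has finished writing the previous one (no caching). The function total_cycles is hypothetical.

```python
def total_cycles(n_blocks: int, n_disks: int,
                 move_cycles: int = 1, write_cycles: int = 4) -> int:
    """Cycle at which the last of n_blocks has been written to n_disks."""
    channel_free = 0           # cycle at which the transfer channel is next free
    disk_free = [0] * n_disks  # cycle at which each disk is next idle
    finish = 0
    for block in range(n_blocks):
        disk = block % n_disks  # round-robin placement of blocks on disks
        # A transfer starts once the channel is free AND the target disk is idle.
        start = max(channel_free, disk_free[disk])
        arrived = start + move_cycles
        channel_free = arrived            # channel can move the next block now
        done = arrived + write_cycles     # disk is busy writing until this cycle
        disk_free[disk] = done
        finish = max(finish, done)
    return finish

print(total_cycles(4, n_disks=1))  # 20: every block waits for the previous write
print(total_cycles(4, n_disks=4))  # 8: the four writes overlap
```

Changing n_blocks shows the "to a degree" caveat: with more blocks than disks, each disk eventually has to finish one write before it can accept the next block.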

Parallel use of multiple disks is the key behind many higher-performance disk technologies. Caviness makes extensive use of ZFS storage pools, which allow multiple physical disks to be used as a single unit with parallel i/o benefits. Parity data is computed when data is written to the pool so that the loss of a hard disk can be sustained: when a disk fails, a new disk is substituted and the missing data is reconstructed onto it from the parity data. ZFS offers different levels of data redundancy, from simple mirroring of data on two disks to triple parity that can tolerate the failure of three disks. ZFS double-parity (raidz2) pools form the basis for the Lustre file system in Caviness.
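The idea of rebuilding a lost disk from parity can be illustrated with single parity based on XOR. This is a deliberately simplified sketch, not ZFS's actual raidz implementation: raidz2 and raidz3 keep additional parity computed with different arithmetic so that two or three simultaneous failures can be survived. The block contents and the helper xor_blocks are hypothetical.

```python
from functools import reduce

def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    # zip(*blocks) yields one tuple per byte position across all blocks.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

data = [b"AAAA", b"BBBB", b"CCCC"]  # hypothetical blocks on three data disks
parity = xor_blocks(data)           # parity block stored on a fourth disk

# Simulate losing the disk holding data[1]: XOR of the survivors and the
# parity block recovers it, because x ^ x == 0 cancels every surviving block.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # b'BBBB'
```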

A Storage Node

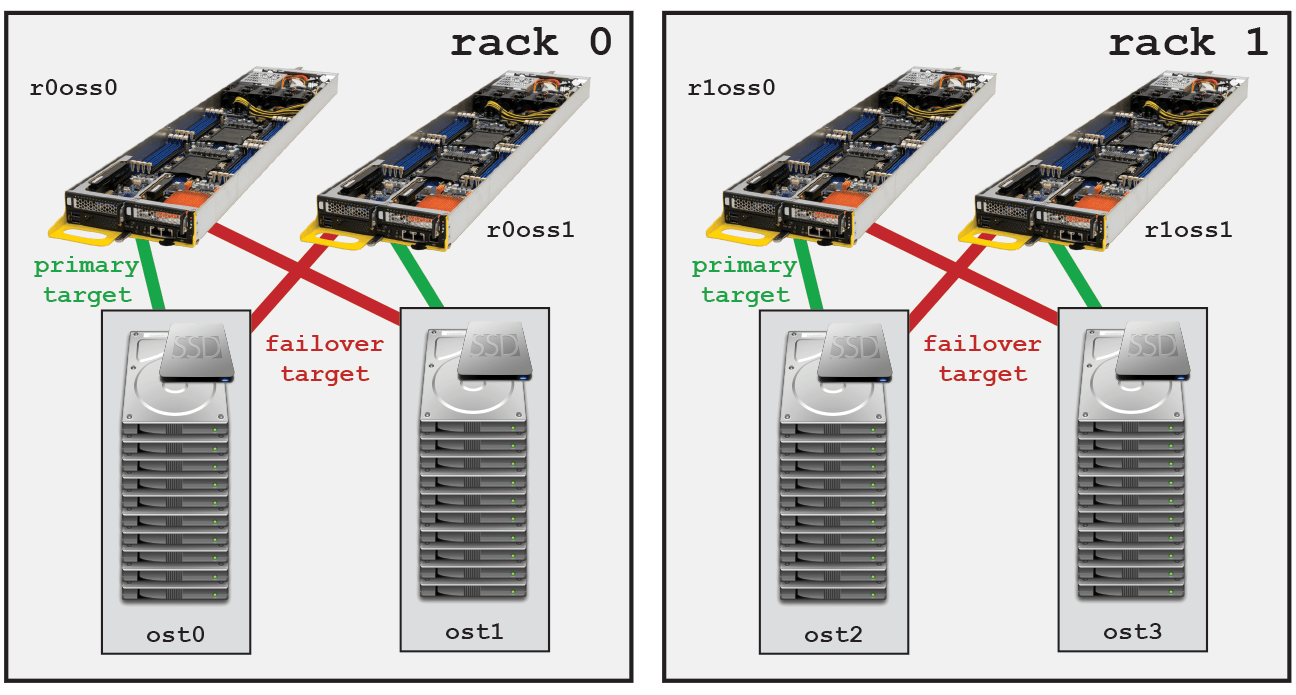

Each rack in the Caviness cluster contains multiple Object Storage Targets (OSTs), each of which contains many hard disks. For example, ost0 contains 10 SATA hard disks (8 TB each, 1 hot spare) managed as a ZFS storage pool, with an SSD acting as a read cache for improved performance.

Each OST can tolerate the concurrent failure of up to two hard disks at the expense of storage space: the raw capacity of ost0 is 72 TB (nine active 8 TB disks), but raidz2 devotes the equivalent of two of those disks to parity, so the data resilience costs about 22% of the capacity and leaves 56 TB usable. The OST is managed by an Object Storage Server (OSS) visible to all login, management, and compute nodes on the cluster network. Nodes funnel their i/o requests to the OSS, which in turn issues i/o requests to the OST in question. Each OSS in Caviness has primary responsibility for one OST, but can also handle i/o for its partner OSS's OST. The figure above shows that r00oss0 is primary for ost0 and, if r00oss1 fails, can also handle i/o for ost1.
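The capacity figures for ost0 follow from a short calculation. This is an idealized sketch: it treats parity as whole disks and ignores ZFS metadata, allocation padding, compression, and the TB versus TiB distinction, so the space reported on a real pool will differ somewhat. The function name raidz_capacity_tb is hypothetical.

```python
def raidz_capacity_tb(total_disks: int, disk_tb: int,
                      hot_spares: int, parity_disks: int) -> tuple[int, int]:
    """Idealized (raw, usable) capacity in TB of a raidz pool."""
    pool_disks = total_disks - hot_spares             # spares hold no data until a failure
    raw = pool_disks * disk_tb
    usable = (pool_disks - parity_disks) * disk_tb    # parity consumes parity_disks worth
    return raw, usable

# ost0: 10 disks of 8 TB, 1 hot spare, raidz2 (double parity)
raw, usable = raidz_capacity_tb(total_disks=10, disk_tb=8, hot_spares=1, parity_disks=2)
overhead = 1 - usable / raw
print(raw, usable, f"{overhead:.0%}")  # 72 56 22%
```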

The status of the Lustre servers can be checked with the lfs check servers command.

The Lustre Filesystem

Each OST increases i/o performance by simultaneously moving data blocks to the hard disks of a raidz2 pool. Each OSS services its OST, accepting and interleaving many i/o workloads. Having multiple OSSs and OSTs adds yet another level of parallelism to the scheme. The agglomeration of multiple OSS nodes, each servicing an OST, is the basis of a Lustre filesystem. The figure above shows that each rack in Caviness contains OSSs and OSTs: Caviness is designed to grow its Lustre file system with each additional rack that is added, increasing the capacity and performance of this resource.

The benefits of a Lustre filesystem should be readily apparent from the discussion above:

- parallelism is leveraged at multiple levels to increase i/o performance

- raidz2 pools provide resilience

- file system capacity and performance are not limited by the size or speed of any single hard disk

Further Performance Boost: Striping

Normally on a Lustre filesystem each file resides in toto on a single OST. In Lustre terminology, a file maps to a single object, and an object is a variable-size chunk of data which resides on an OST. When a program works with a file, it must direct all of its i/o requests to a single OST (and thus a single OSS).

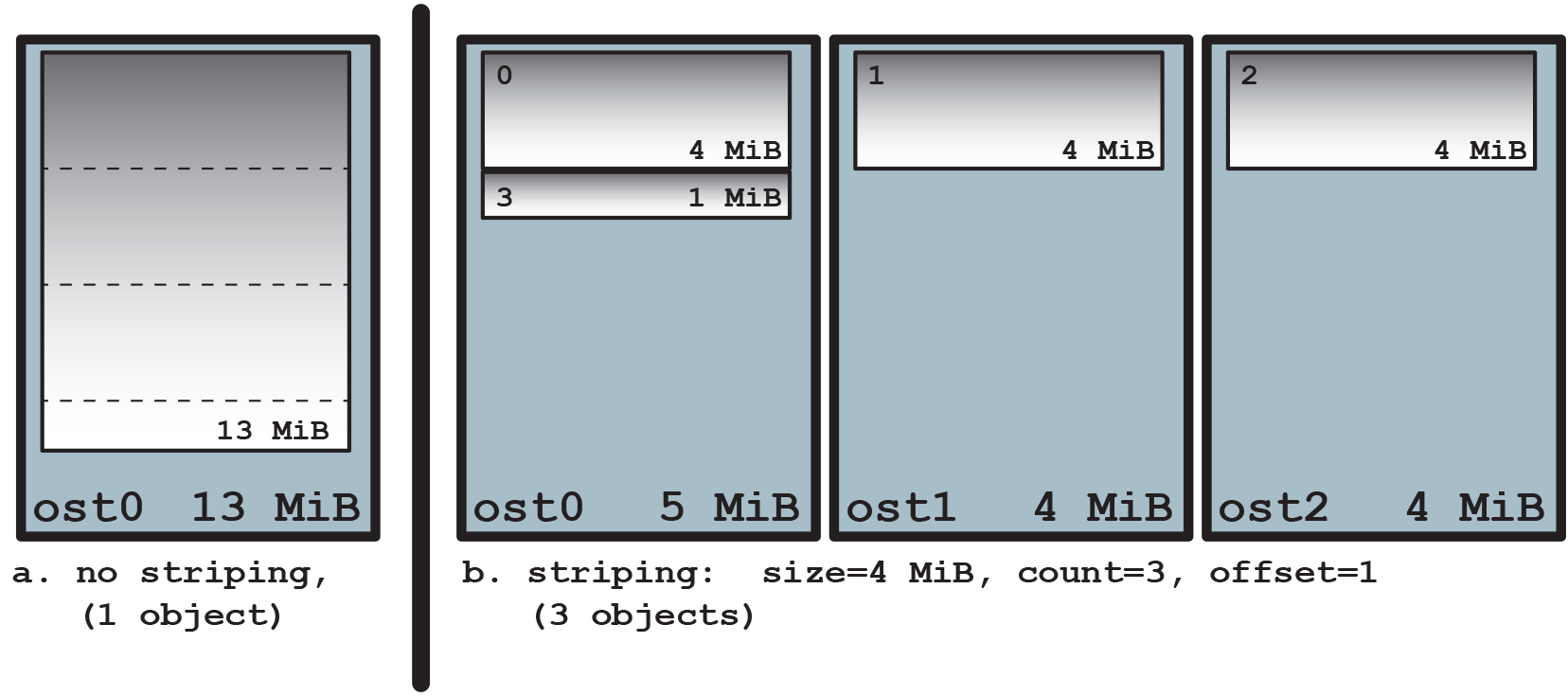

For large files, or files that are internally organized as "records", i/o performance can be further improved by striping the file across multiple OSTs. Striping divides a file into a set of sequential, fixed-size chunks called stripes. The stripes are distributed round-robin to N unique Lustre objects, and thus onto N unique OSTs. For example, consider a 13 MiB file:

Without striping, all 13 MiB of the file resides in a single object on ost0 (see (a) above). All i/o with respect to this file is handled by oss0; appending 5 MiB to the file will grow the object to 18 MiB.

With a stripe count of three and a stripe size of 4 MiB, the Lustre filesystem pre-allocates three objects on unique OSTs on behalf of the file (see (b) above). The file is split into sequential 4 MiB segments (the stripes), and the stripes are written round-robin to the objects allocated to the file. In this case, appending 5 MiB to the file will see stripe 3 (counting from 0) extended to a full 4 MiB and a new stripe of 2 MiB added to the object on ost1. For large files and record-style files, striping introduces another level of parallelism that can dramatically increase the performance of programs that access them.
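The round-robin layout described above can be modeled in a few lines. This is a simplified sketch of plain round-robin striping, not Lustre's own code: objects are numbered 0, 1, 2 in allocation order, and which OSTs they actually live on is decided by Lustre. The helper names locate and object_sizes are hypothetical.

```python
MIB = 1024 * 1024

def locate(offset: int, stripe_size: int, stripe_count: int) -> tuple[int, int, int]:
    """Map a file byte offset to (stripe index, object index, offset within object)."""
    stripe = offset // stripe_size   # which stripe (counting from 0) holds the byte
    obj = stripe % stripe_count      # stripes are dealt out to objects round-robin
    # Every full pass over the objects adds one stripe_size to each object.
    obj_offset = (stripe // stripe_count) * stripe_size + offset % stripe_size
    return stripe, obj, obj_offset

def object_sizes(file_size: int, stripe_size: int, stripe_count: int) -> list[int]:
    """Bytes held by each of the file's objects."""
    sizes = [0] * stripe_count
    for start in range(0, file_size, stripe_size):
        stripe = start // stripe_size
        # The final stripe may be only partially filled.
        sizes[stripe % stripe_count] += min(stripe_size, file_size - start)
    return sizes

print([s // MIB for s in object_sizes(13 * MIB, 4 * MIB, 3)])  # [5, 4, 4]
print([s // MIB for s in object_sizes(18 * MIB, 4 * MIB, 3)])  # [8, 6, 4] after appending 5 MiB
```

With stripe_count set to 1, the same model reduces to the unstriped case: a single object holding all 13 MiB (or 18 MiB after the append).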

Use the lfs setstripe command to pre-allocate the objects for a striped file: lfs setstripe -c 4 -S 8m my_new_file.nc creates the file my_new_file.nc containing zero bytes, with a stripe size (-S) of 8 MiB, striped across four objects (-c).

Creating a new file with lfs setstripe and copying the contents of the old file into it effectively changes the data's striping pattern.
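The create-then-copy approach can be scripted. The sketch below is a hypothetical helper, not a Caviness-provided tool: it assumes the lfs command is on the PATH, that the destination lies on a Lustre filesystem, and that the destination does not exist yet. It uses only the -c and -S options shown above, and it writes into the freshly created file rather than replacing it, so the pre-allocated layout is kept.

```python
import shutil
import subprocess

def setstripe_cmd(path: str, count: int, size: str) -> list[str]:
    """Argument list for 'lfs setstripe' (-c = stripe count, -S = stripe size)."""
    return ["lfs", "setstripe", "-c", str(count), "-S", size, path]

def restripe_copy(old_path: str, new_path: str, count: int, size: str) -> None:
    """Copy old_path into a newly created, newly striped new_path (hypothetical helper)."""
    # lfs setstripe creates new_path as an empty file with its objects pre-allocated;
    # check=True raises CalledProcessError if the command fails.
    subprocess.run(setstripe_cmd(new_path, count, size), check=True)
    # Open the existing file for writing without recreating it, then stream the data in.
    with open(old_path, "rb") as src, open(new_path, "r+b") as dst:
        shutil.copyfileobj(src, dst)
```

For example, restripe_copy("my_old_file.nc", "my_new_file.nc", 4, "8m") would run the same lfs setstripe command as above and then fill the new file with the old file's data; both file names are placeholders.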