Getting started on Farber

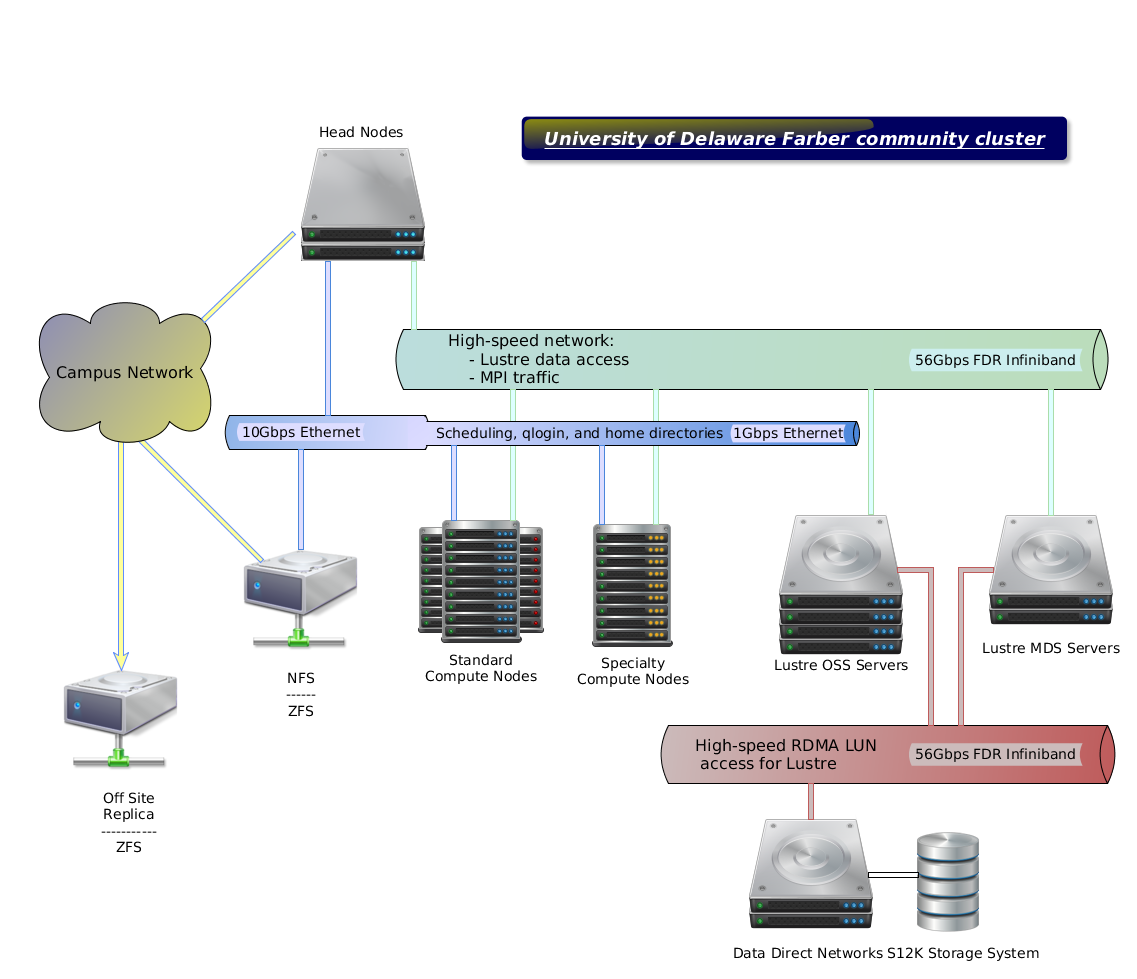

The Farber cluster, UD's second Community Cluster, was deployed in 2014 and is a distributed-memory Linux cluster. It consists of 100 compute nodes (2000 cores, 6.4 TB memory). Each node has two Intel “Ivy Bridge” 10-core processors in a dual-socket configuration, for 20 cores per node. An FDR InfiniBand network fabric supports the Lustre filesystem (approximately 256 TiB of usable space). Gigabit and 10-Gigabit Ethernet networks provide access to additional filesystems and the campus network. The cluster was purchased with a proposed four-year life, putting its retirement in the July 2018 to September 2018 time period.
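
The headline figures above follow from the per-node configuration. The short sketch below reproduces the arithmetic; the per-node memory value is only an average derived from the totals (assuming decimal units, 1 TB = 1000 GB), and individual nodes may be configured differently.

```python
# Figures quoted in the cluster description.
NODES = 100
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 10
TOTAL_MEMORY_TB = 6.4

cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET
total_cores = NODES * cores_per_node

# Average only (assumption: 1 TB = 1000 GB); some nodes may have more memory.
avg_memory_gb_per_node = TOTAL_MEMORY_TB * 1000 / NODES

print("cores per node:", cores_per_node)
print("total cores:", total_cores)
print("average memory per node (GB):", round(avg_memory_gb_per_node))
```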

The cluster has been named in honor of David Farber, UD professor and Distinguished Policy Fellow in the Department of Electrical and Computer Engineering. Farber is one of the pioneers who helped develop the U.S. Department of Defense’s ARPANET into the modern Internet. His work on CSNET, a network linking computer science departments across the globe, was a key step between the ARPANET and today’s Internet. Today, Farber’s work focuses on the translation of technology and economics into policy, the impact of multi-terabit communications, and new computer architecture innovations on future Internet protocols and architectures. He was named to the Internet Society’s board of trustees in 2012.

For general information about the community cluster program, visit the IT Research Computing website. To cite the Farber cluster for grants, proposals and publications, use these HPC templates.

Update: Starting in July 2023, IT RCI will begin dismantling the Farber cluster. Compute nodes, extraneous network equipment, and the racks containing them will be removed from the Data Center. The Farber head node will remain online to provide ongoing access to the home, workgroup, and /lustre file systems[1] for a period yet to be determined.

Home and Workgroup File System Details: Starting on the same date, daily snapshotting of the home and workgroup file systems will be suspended. All prior snapshots will remain present. This will allow users to rearrange, archive, and delete files in those directories, while the final snapshot remains available as a read-only backup of the content prior to cleanup activities.

[1] In our original email regarding the shutdown of Farber we stated that /lustre would not be available after July 2023. Given the amount of data present on /lustre, we have reconsidered that policy.

The login (head) node remains online to allow file transfer off Farber's filesystems (the home, /home/work, and /lustre/scratch directories).
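
One common way to copy files off a login node is rsync over SSH. The sketch below only builds and prints such a command so it can be reviewed before anything is transferred. The user name, host name, and paths are hypothetical placeholders, not values taken from this page; substitute your own account, the cluster's actual login host, and the directory you want to retrieve.

```python
import shlex


def build_rsync_command(user, host, remote_path, local_dir):
    """Return an rsync-over-SSH command that copies remote_path into local_dir."""
    # -a (archive) recurses and preserves permissions, timestamps, and symlinks;
    # -v lists each file as it is transferred.
    return ["rsync", "-av", f"{user}@{host}:{remote_path}", local_dir]


# Hypothetical values for illustration only.
cmd = build_rsync_command(
    "traine",
    "farber.example.edu",
    "/lustre/scratch/traine/results/",
    "./farber-backup/",
)
print(shlex.join(cmd))
```

To perform the transfer, run the printed command in a terminal on your local machine, or pass the list to `subprocess.run(cmd, check=True)`.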

Configuration

Overview

An HPC system always has a public-facing system known as the login node or head node. The login node is supplemented by many compute nodes, which are connected by a private network. Each compute node typically has several multi-core processors that share memory. Finally, the login node and the compute nodes share one or more filesystems over a high-speed network.

Login node

The login node is the primary node shared by all cluster users. Its computing environment is a full standard variant of UNIX configured for scientific applications. This includes command documentation (man pages), scripting tools, compiler suites, debugging/profiling tools, and application software. In addition, the login node has several tools to help you move files between the HPC filesystem and your local machine, other clusters, and web-based services.

If your work requires highly interactive graphics and animations, these are best done on your local workstation rather than on the cluster. Use the cluster to generate files containing the graphics information, and download them from the HPC system to your local system for visualization.

Compute nodes

There are many compute nodes with different configurations. Each node may have extra memory, multi-core processors (CPUs), GPUs and/or extra local disk space. They may have different OS versions or OS configurations, such as mounted network disks. This document assumes all the compute nodes have the same OS and almost the same configuration. Some nodes may have more cores, more memory or more disk.

The standard UNIX on the compute nodes is configured to support just the running of your jobs, particularly parallel jobs. For example, there are no man pages on the compute nodes. Large components of the OS, such as X11, are only added to the environment when needed.

All the multi-core CPUs and GPUs share the same memory in what may be a complicated manner. To add more processing capability while keeping hardware expense and power requirements down, most architectures use Non-Uniform Memory Access (NUMA). The processors may also share hardware, such as floating-point units (FPUs).
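
On a Linux node, the NUMA layout can be inspected through sysfs. The following is a minimal sketch, assuming the standard Linux path /sys/devices/system/node is present; the function name and its parameter are illustrative, and on systems without that directory it simply returns an empty mapping.

```python
import glob
import os


def numa_layout(sysfs_root="/sys/devices/system/node"):
    """Map each NUMA node name (e.g. 'node0') to its CPU list string."""
    layout = {}
    for node_dir in sorted(glob.glob(os.path.join(sysfs_root, "node[0-9]*"))):
        cpulist_path = os.path.join(node_dir, "cpulist")
        # Skip entries that do not expose a CPU list.
        if not os.path.isfile(cpulist_path):
            continue
        with open(cpulist_path) as fh:
            layout[os.path.basename(node_dir)] = fh.read().strip()
    return layout


for node, cpus in numa_layout().items():
    print(f"{node}: CPUs {cpus}")
```

Each line of output names one NUMA node and the cores attached to it; memory allocated on a core's own node is generally faster to reach than memory on another node.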

Commercial applications, and normally your program, will use a layer of abstraction called a programming model. Consult the cluster-specific documentation for advanced techniques to take advantage of the low-level architecture.

Storage

Permanent filesystems

At UD, permanent filesystems are those that are backed up or replicated at an off-site disaster recovery facility. This always includes the home filesystem, which contains each user's home directory and has a modest per-user quota. A cluster may also have a larger permanent filesystem used for research group projects. The system is designed to let you recover older versions of files through a self-service process.

High-performance filesystems

One important component of HPC designs is to provide fast access to large files and to many small files. These days, high-performance filesystems have capacities ranging from hundreds of terabytes to petabytes. They are designed to use parallel I/O techniques to reduce file-access time. The Lustre filesystems, in use at UD, are composed of many physical disks using technologies such as RAID-6 to give resilience, data integrity, and parallelism at multiple levels. They use high-bandwidth interconnects such as InfiniBand and 10-Gigabit Ethernet.

High-performance filesystems, such as Lustre, are typically designed as volatile, scratch storage systems. This is because traversing the entire filesystem takes so much time that it becomes financially infeasible to create off-site backups. However, the RAID-6 design increases user confidence by providing a high level of built-in redundancy against hardware failure.
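
To make the redundancy concrete: a RAID-6 array reserves the equivalent of two disks for parity, so it can survive any two simultaneous disk failures within the array. The sketch below computes usable capacity for a single array; the disk count and disk size are made-up values for illustration, not Farber's actual Lustre layout.

```python
def raid6_usable_capacity(num_disks, disk_size_tb):
    """Usable capacity of one RAID-6 array: two disks' worth is parity."""
    if num_disks < 4:
        raise ValueError("RAID-6 needs at least four disks")
    return (num_disks - 2) * disk_size_tb


# Hypothetical array: 10 disks of 4 TB each.
print(raid6_usable_capacity(10, 4), "TB usable out of", 10 * 4, "TB raw")
```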

Local filesystems

Each node typically has an internal, locally connected disk that is fast, but which does not use a parallel filesystem. Its capacity may be measured in terabytes. Part of the local disk is used for system tasks such as memory management, which might include cache memory and virtual memory. The remainder of the disk is ideal for applications that need large scratch storage for the duration of a run. That portion is referred to as the node scratch filesystem.

Each node scratch filesystem disk is only accessible by the node in which it is physically installed. Often, a job scheduling system, such as Grid Engine, creates a temporary directory associated with your job on this filesystem. When your job terminates normally, the job scheduler automatically erases that directory and its contents.
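
Because the scheduler erases the job's temporary directory, results must be copied to a permanent location before the job ends. The sketch below shows one way to do this, assuming the scheduler exports the temporary directory through the TMPDIR environment variable (as Grid Engine does); Python's tempfile module consults TMPDIR first and falls back to the system default elsewhere. The function name, file name, and file contents are placeholders.

```python
import os
import shutil
import tempfile


def run_with_node_scratch(results_dir):
    """Write intermediate data to node scratch, then keep a copy in results_dir."""
    # mkdtemp() creates the directory under TMPDIR when that variable is set
    # (assumption: the job scheduler sets it), else under the system temp dir.
    work_dir = tempfile.mkdtemp(prefix="work-")
    try:
        intermediate = os.path.join(work_dir, "intermediate.dat")
        with open(intermediate, "w") as fh:
            fh.write("placeholder for large temporary data\n")

        # Copy what must survive the job to permanent storage.
        os.makedirs(results_dir, exist_ok=True)
        return shutil.copy(intermediate, results_dir)
    finally:
        # Clean up explicitly; the scheduler's own cleanup only covers
        # jobs that terminate normally.
        shutil.rmtree(work_dir, ignore_errors=True)
```

A job script might call `run_with_node_scratch("results")` with a path on the home or workgroup filesystem; the function returns the path of the copied file.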

Software

IT-managed software: A list of installed software that IT builds and maintains for Farber users.

Documentation for all software is organized in alphabetical order on the sidebar.

Review NVIDIA's GPU-Accelerated Applications list for applications optimized to work with GPUs. These applications can take advantage of nodes equipped with NVIDIA Tesla K20X coprocessors.

Help

System or account problems, or can't find an answer on this wiki

If you are experiencing a system-related problem, first check Farber cluster monitoring (off-campus access requires UD VPN). To report a new problem, or if you cannot find the help you need on this wiki, submit a Research Computing High Performance Computing (HPC) Clusters Help Request and complete the form, including Farber and your problem details in the description field.

Ask or tell the HPC community

hpc-ask is a Google group established to stimulate interactions within UD’s broader HPC community and is based on members helping members. This is a great venue to post a question about HPC, start a discussion, or share an upcoming event with the community. Anyone may request membership. Messages are sent as a daily summary to all group members. This list is archived, public, and searchable by anyone.

Publication and Grant Writing Resources

HPC templates are available to use for a proposal or publication to acknowledge use of or describe UD’s Information Technologies HPC resources.