
Using Caviness or DARWIN as a Remote VisIt 3.2.x Host

The VisIt data visualization software can be used to post-process and interact with myriad kinds of data. One useful feature is the software's ability to run a local graphical user interface on the user's laptop or desktop which connects to data-access and computational engines running on another host. When that other host is a cluster – like Caviness or DARWIN – this paradigm gives VisIt:

- the ability to open files resident on the cluster without having to copy them to the laptop or desktop

- the ability to leverage cluster compute resources in post-processing of the data

Remote connections are described using host profiles in VisIt.

This recipe uses VisIt 3.2.1, which is present on both Caviness and DARWIN as VALET package visit/3.2.1. The package has been built with all optional modules and Open MPI parallelism. The local copy of VisIt on a user's laptop or desktop should likewise be of the 3.2 lineage for best compatibility. See VisIt Releases to download the appropriate version.

Host Profiles

IT RCI staff have authored host profiles for users of the Caviness and DARWIN clusters. The XML files can be found on each cluster in the remote/hosts subdirectory of the VisIt version's installation. For 3.2.1, the path is /opt/shared/visit/3.2.1/remote/hosts. Users can download these XML files and copy them to a path on their laptop or desktop where their local copy of VisIt 3.2.1 will see them (see section 13.2.1.4 on this page).
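The local copy step can be sketched in Python. This is only an illustration: it assumes the XML files have already been downloaded into a local directory, and that the local VisIt reads host profiles from ~/.visit/hosts (a common location on Linux and macOS, but an assumption here; the VisIt documentation referenced above is authoritative). The function name install_host_profiles is hypothetical.

```python
import shutil
from pathlib import Path


def install_host_profiles(downloaded_dir, visit_hosts_dir="~/.visit/hosts"):
    """Copy every *.xml file from downloaded_dir into visit_hosts_dir.

    visit_hosts_dir defaults to an ASSUMED per-user location; adjust it to
    wherever the local VisIt actually looks for host profiles.
    Returns the names of the files that were copied.
    """
    src = Path(downloaded_dir).expanduser()
    dest = Path(visit_hosts_dir).expanduser()
    dest.mkdir(parents=True, exist_ok=True)

    copied = []
    for xml_file in sorted(src.glob("*.xml")):
        shutil.copy2(xml_file, dest / xml_file.name)
        copied.append(xml_file.name)
    return copied
```

Calling install_host_profiles("~/Downloads/hosts") would copy only the XML files and leave anything else in the download directory alone. VisIt must be restarted afterwards so that it picks up the new profiles.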

A few details on the implementation of remote access and engines follow.

Installation Directory

One important detail behind the Caviness and DARWIN host profiles is the "Path to visit installation" string. The expectation is for this to be the directory in which VisIt was installed. This works fine if that copy of VisIt only uses bundled and OS-provided libraries; however, if the copy depends on other libraries – a VALET-managed version of Open MPI, for instance – the direct execution of the visit program will fail. Thus, it's necessary to create a wrapper script on the cluster that performs the appropriate environment setup (e.g. using vpkg_require) and executes the desired visit command. This wrapper is located at /opt/shared/visit/<version>/remote/bin/visit and basically removes the remote directory from the command name (and all recognizably path-oriented arguments) before re-executing the /opt/shared/visit/<version>/bin/visit command with the same arguments. The script also needs to handle transitioning computational engine startups to the appropriate workgroup (via the VisIt "bank" option, -b <workgroup-name>) so that Slurm accepts the job submission.
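The path rewriting performed by the wrapper can be illustrated with a short Python sketch. This is not the actual wrapper script (its implementation is not shown here); it only demonstrates the idea of removing the remote directory from the command name and from any argument that is recognizably a path under it, and of picking out the workgroup passed with -b. The function names and the example workgroup my_workgroup are hypothetical.

```python
VISIT_ROOT = "/opt/shared/visit/3.2.1"  # install prefix named in the text


def strip_remote(arg, root=VISIT_ROOT):
    """Map a path under <root>/remote back to the same path under <root>."""
    remote = root + "/remote"
    if arg == remote:
        return root
    if arg.startswith(remote + "/"):
        return root + arg[len(remote):]
    return arg  # not a recognizable remote path: pass through unchanged


def rewrite_command(argv, root=VISIT_ROOT):
    """Apply strip_remote to the command name and to every argument."""
    return [strip_remote(arg, root) for arg in argv]


def bank_from_args(argv):
    """Return the value following -b (the VisIt "bank" option), if any."""
    for flag, value in zip(argv, argv[1:]):
        if flag == "-b":
            return value
    return None


incoming = [VISIT_ROOT + "/remote/bin/visit", "-b", "my_workgroup"]
print(rewrite_command(incoming))
print(bank_from_args(incoming))
```

A real wrapper would also set up the environment (e.g. with vpkg_require) and switch to the workgroup before re-executing the rewritten command; those steps are site-specific and are left out of the sketch.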

Using the correct path to the remote wrapper script is critical to the proper usage of Caviness and DARWIN as remote engines for VisIt. The host profiles published on Caviness and DARWIN for each version of VisIt are guaranteed to contain the appropriate path.

Launch Profiles

Each host profile contains a series of launch profiles that direct how a remote computational engine is started from the user's laptop or desktop. Properties like

- number of nodes

- number of processors (tasks)

- number of threads (cpus per task)

- partition/queue for job

- "bank" account (workgroup)

- commands used to submit/execute the engine (sbatch/mpirun)

- additional flags to sbatch or mpirun

are present and critically affect how (and if!) the remote engine will function. IT RCI performed extensive testing to produce an optimal set of flags (in conjunction with the "remote" visit startup script) for users of the Caviness and DARWIN clusters.

The host profiles published on Caviness and DARWIN for each version of VisIt contain several distinct launch profiles:

- A serial profile: the engine executes with a single CPU core and 4 GiB of memory

- A coarse-grained parallel profile: the engine executes 4 workers on 4 CPU cores and 16 GiB of memory

- A hybrid parallel profile: the engine executes 4 workers on 16 CPU cores (4 threads per worker) and 16 GiB of memory

- A full-node parallel profile: the engine executes as many workers as possible (given the cluster specs) with 4 threads per worker and all of the memory in the node (--exclusive flag)
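The arithmetic behind the first three profiles can be made explicit with a small Python sketch. The worker, thread, and memory figures come from the list above; everything else is an assumption for illustration. In particular, the slurm_request method shows one way such a request could be written with standard sbatch options on a single node. It is not the set of flags the published launch profiles actually contain. The full-node profile is omitted because its worker count depends on the node type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaunchProfile:
    name: str
    workers: int             # MPI tasks
    threads_per_worker: int  # CPUs per task
    memory_gib: int          # total memory for the engine

    @property
    def cpu_cores(self):
        """Total CPU cores used by the engine."""
        return self.workers * self.threads_per_worker

    def slurm_request(self):
        """Illustrative single-node sbatch options (an assumption, not the
        flags used by the published profiles)."""
        return [
            f"--ntasks={self.workers}",
            f"--cpus-per-task={self.threads_per_worker}",
            f"--mem={self.memory_gib}G",
        ]


PROFILES = [
    LaunchProfile("serial", 1, 1, 4),
    LaunchProfile("coarse-grained parallel", 4, 1, 16),
    LaunchProfile("hybrid parallel", 4, 4, 16),
]

for profile in PROFILES:
    print(f"{profile.name}: {profile.cpu_cores} cores, {profile.memory_gib} GiB")
```

Note that the hybrid profile requests the same 16 GiB as the coarse-grained one while using four times as many cores, so the memory available per core is lower.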

The Caviness launch profiles are written to submit jobs to the workgroup's owned partition (_workgroup_). The DARWIN launch profiles are written to submit jobs to the idle partition. The user is responsible for altering these fields in the host profiles if an alternate partition is desired (e.g. by duplicating an existing launch profile and altering it).

If the user is a member of a single workgroup on Caviness or DARWIN and wants to avoid having to enter a "bank" account in the engine selection dialog on every use, the locally-installed host profiles can be edited to replace the value "WORKGROUP" with the user's desired workgroup name.
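That edit can be done in any text editor. As a sketch, it could also be scripted in Python as below. The helper name set_workgroup and the backup-file convention are hypothetical, and the code assumes that the literal string WORKGROUP appears in the XML only where the workgroup name belongs; it is worth inspecting the files before and after.

```python
from pathlib import Path


def set_workgroup(hosts_dir, workgroup, placeholder="WORKGROUP"):
    """Replace the placeholder with a workgroup name in every *.xml file.

    A copy of each modified file is kept next to it with a .bak suffix.
    Returns the names of the files that were changed.
    """
    changed = []
    for profile in sorted(Path(hosts_dir).expanduser().glob("*.xml")):
        text = profile.read_text(encoding="utf-8")
        if placeholder not in text:
            continue
        backup = profile.with_name(profile.name + ".bak")
        backup.write_text(text, encoding="utf-8")
        profile.write_text(text.replace(placeholder, workgroup), encoding="utf-8")
        changed.append(profile.name)
    return changed
```

For example, set_workgroup("~/.visit/hosts", "my_workgroup") would update the locally installed profiles in place, where both the directory and the workgroup name are placeholders for the user's own values.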

The existing launch profiles should be adequate for an advanced user to infer how to author additional launch profiles that spread the remote engine's MPI execution across multiple nodes/cores.

Using Remote Access

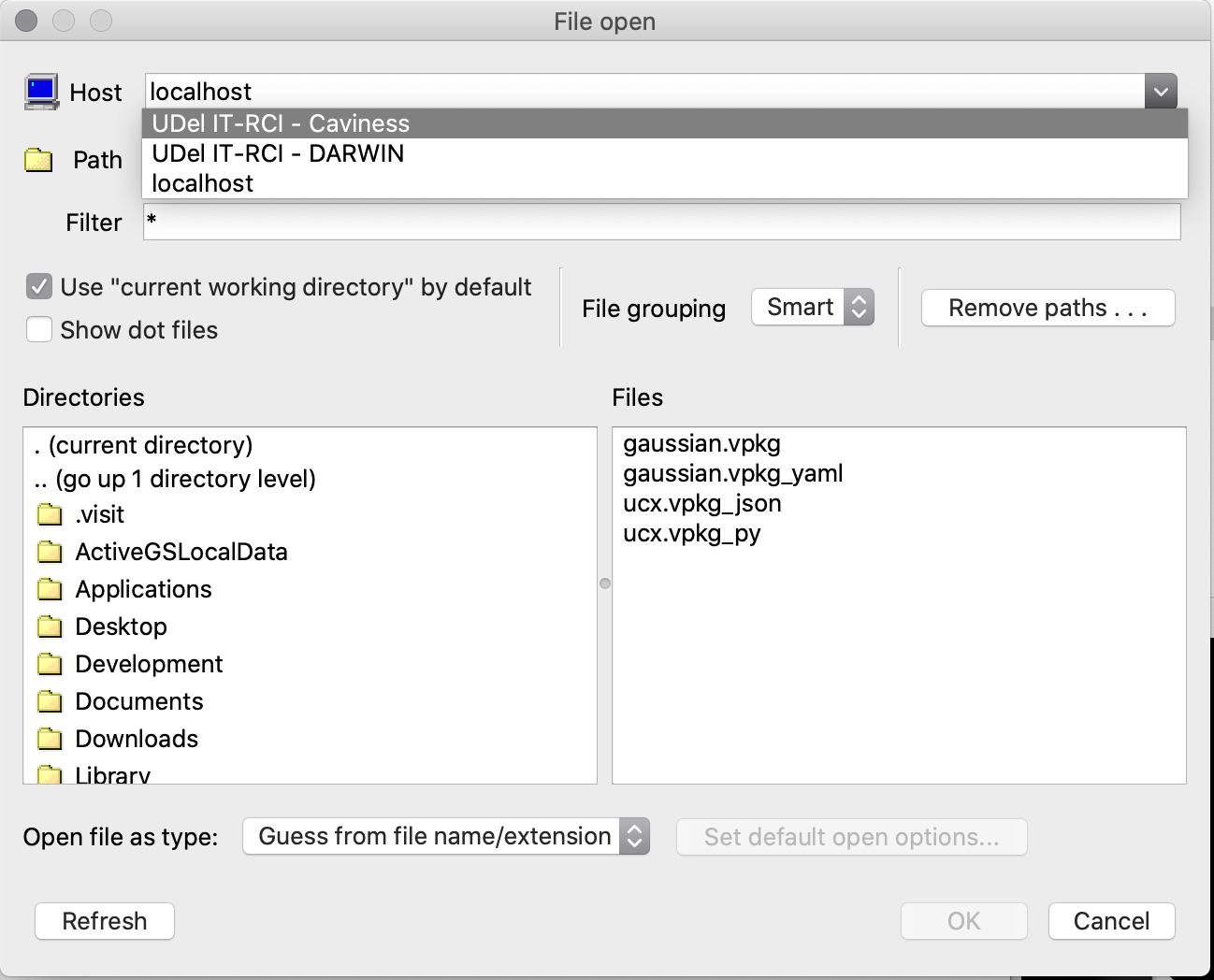

With the host profiles properly installed, the next time VisIt is started on the user's laptop or desktop a remote session can be created via the file open dialog:

Selecting the "UDel IT-RCI - Caviness" option from the host menu should produce a file listing of the user's home directory. The user can navigate to the appropriate directory on the remote system and select the file to open.

Behind the scenes, the local copy of VisIt opens an SSH connection to the cluster login node and tunnels a number of the local network ports it is using to the login node. This tunneling forms the connection between the local VisIt and the remote metadata server that gets launched in the SSH session. Note that disruption of the SSH connection will disconnect the local VisIt from the remote metadata server (and compute engine).

The metadata server will execute on the cluster login node; it is not expected to produce significant computational load.

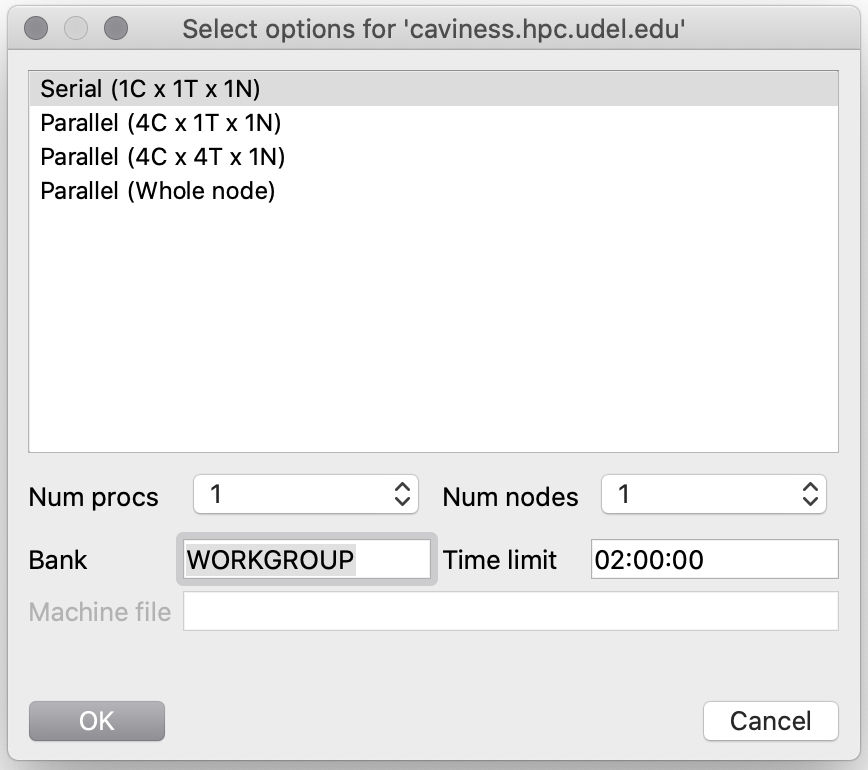

Once the remote file has been opened, the local VisIt will prompt the user to choose a remote computation engine via a launch profile (as declared in the host profile that was installed):

The field labelled "Bank" corresponds to the Caviness or DARWIN workgroup the user would like to use to execute the remote engine: this field MUST be filled in by the user before clicking the "OK" button to continue. The user can also alter the maximum wall time for the remote engine by editing the "Time limit" field.

After choosing a launch profile from the engine selection window, the metadata server running on the cluster login node manufactures a Slurm job and submits it. IT RCI made some modifications to the /opt/shared/visit/<version>/<version>/bin/internallauncher script to customize this procedure:

- The job script is generated in the /run/user/<uid#> directory rather than in /tmp; when a user has fully logged-out from a login node the per-user /run directory is automatically removed (taking those unnecessary job scripts with it)

- The job script name follows the pattern visit.<YYYY><MM><DD>T<HH><mm><SS>; the only possible issue would be for two remote engines to be started in the exact same second of time (not very likely)

- If an IT RCI-managed version of Open MPI is present in the remote VisIt environment, the job script will source the templated environment setup scriptlet (/opt/shared/slurm/templates/libexec/openmpi.sh) before executing the remote engine

- The Slurm job output is directed to a file named visit-remote-<jobid>.out
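The naming conventions above can be illustrated with a few lines of Python. This is a sketch of the patterns only, not the code in the modified internallauncher script; the function names are hypothetical, and the uid and job id values are made up.

```python
from datetime import datetime


def job_script_path(uid, when):
    """Build a path of the form /run/user/<uid#>/visit.<YYYY><MM><DD>T<HH><mm><SS>."""
    return f"/run/user/{uid}/visit.{when:%Y%m%dT%H%M%S}"


def job_output_name(job_id):
    """Build an output file name of the form visit-remote-<jobid>.out."""
    return f"visit-remote-{job_id}.out"


first = job_script_path(1001, datetime(2021, 3, 4, 5, 6, 7))
second = job_script_path(1001, datetime(2021, 3, 4, 5, 6, 7, 500000))
print(first)
print(first == second)  # same second, so the two names collide
print(job_output_name(123456))
```

Because the timestamp has one-second resolution, two engines started by the same user within the same second would map to the same script name, which is the unlikely collision mentioned in the list.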

Once the remote job has been allocated resources and executed by Slurm, the connection progress box should disappear. At this point, the local VisIt will use the remote engine for any data processing, etc.

If a user sets the VISIT_LOG_COMMANDS environment variable to any non-empty value in their ~/.bashrc file, the "remote" visit wrapper will log the commands it is reissuing to the user's ~/visit.log file. This can be helpful when developing a new launch profile for one of the clusters.
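The "any non-empty value" rule can be sketched in Python as follows. This mimics the described behavior and is not the wrapper's actual code: the function name log_command is hypothetical, and the one-command-per-line log format is an assumption. On the cluster, the variable itself would typically be set with a line such as export VISIT_LOG_COMMANDS=1 in ~/.bashrc.

```python
import shlex


def log_command(argv, environ, log_path):
    """Append the reissued command to log_path if logging is enabled.

    Logging is enabled when VISIT_LOG_COMMANDS is set to any non-empty
    value in environ. Returns True if a line was written.
    """
    if not environ.get("VISIT_LOG_COMMANDS"):
        return False
    with open(log_path, "a", encoding="utf-8") as log:
        # shlex.join quotes arguments so the line can be pasted into a shell
        log.write(shlex.join(argv) + "\n")
    return True
```

Passing os.environ as the environ argument and a path such as ~/visit.log (expanded with os.path.expanduser) would reproduce the behavior described above. An unset or empty variable leaves the log untouched.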