Orphaned QLOGIN Sessions

The Problem

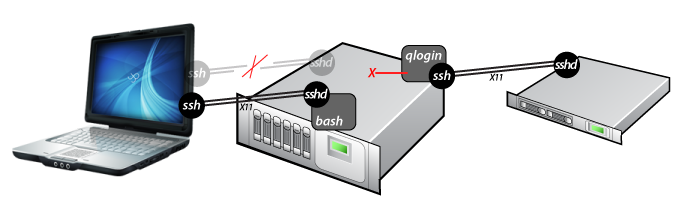

A user connects to a cluster head node using SSH. The user then opens an interactive session with a compute node by means of the qlogin command:

$ ssh mills.hpc

............................................................

Mills cluster (mills.hpc.udel.edu)

This computer system is maintained by University of Delaware IT.

Links to documentation and other online resources can be found at:

http://docs.hpc.udel.edu/

For support, please contact consult@udel.edu

............................................................

[(it_css:traine)@mills it_css]$ qlogin

Your job 69114 ("QLOGIN") has been submitted

waiting for interactive job to be scheduled ...

Your interactive job 69114 has been successfully scheduled.

Establishing /opt/shared/GridEngine/local/qlogin_ssh session to host n010 ...

[traine@n010 it_css]$

This is illustrated in the following cartoon:

While this qlogin session is active, the user's computer somehow becomes disconnected from the cluster head node. Some ways in which this can happen include:

- user accidentally closes the SSH window

- user puts his/her laptop to sleep

- the network infrastructure between the user and the cluster experiences issues (e.g. wireless access point goes down)

This severs the interactive session between the user's computer and the cluster head node. However, the qlogin session still exists between the cluster head node and compute node – its network connection has not been disrupted. Though the user cannot reestablish control of the qlogin session, the session will remain active indefinitely:

Orphaned qlogin sessions may be detrimental since they block queue slots and may consume system resources.

The Solution

UD IT has implemented a service that periodically checks for orphaned qlogin sessions. Any new orphaned sessions that are identified result in an email being sent to the user who owns the session:

To: traine@udel.edu
Date: Fri, 24 Aug 2012 14:20:00 -0400 (EDT)
Subject: [Mills] Orphaned QLOGIN sessions detected
Reply-to: consult@udel.edu

This is an automated message from the Mills cluster.

You currently have 2 orphaned QLOGIN sessions running on the cluster head node. These QLOGIN sessions will be automatically killed 5 days from now to reclaim the queue slots they occupy.

For more information on what an "orphaned QLOGIN session" means, please visit

http://mills.hpc.udel.edu/wiki/technical/gridengine/qlogin-orphan

You can dispose of these QLOGIN sessions yourself using the method outlined on the cited webpage and the following information:

ssh pid     GridEngine job information
----------  ------------------------------------------------------------
27571       Job id: 62048 (shepherd pid: 32427 on n018)
23081       Job id: 64143 (shepherd pid: 3925 on n045)

If you have an orphaned QLOGIN session running an active computational task and you do NOT want it to be killed automatically, reply to this message immediately. Be sure to identify the session's process identifier (pid). The message will be sent to the IT Support Center (consult@udel.edu).

Five days from the time this message was sent – in this example, any time after Wednesday, August 29, 2:20 p.m. – the two processes listed will be automatically killed and their resources reclaimed by Grid Engine. The user can also manually kill any of the orphaned sessions before the five days have passed by using the kill command with the ssh pids listed in the message:

[(it_css:traine)@mills it_css]$ kill -9 27571 23081
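The five-day window described above is plain date arithmetic on the message's Date header. The following is a minimal sketch of that calculation; the function name `kill_deadline` and the `grace_days` parameter are hypothetical and are not part of the service itself.

```python
from datetime import timedelta
from email.utils import parsedate_to_datetime


def kill_deadline(date_header, grace_days=5):
    """Return the earliest time at which the orphans may be auto-killed.

    date_header is the value of the notification's Date: header.
    grace_days defaults to the 5-day window stated in the notification.
    """
    sent = parsedate_to_datetime(date_header)  # timezone-aware datetime
    return sent + timedelta(days=grace_days)


if __name__ == "__main__":
    deadline = kill_deadline("Fri, 24 Aug 2012 14:20:00 -0400")
    print(deadline.isoformat())
```

For the example message, this gives August 29, 2012 at 2:20 p.m. Eastern time, which agrees with the deadline quoted above.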

What's Running in an Orphan?

Users who receive an email like the one above may not know what programs are running within each orphaned session. Ideally, when qlogin is used to run a long computational task, the -N flag should be used to set the job name to a short description of the task (instead of the default name, QLOGIN):

[(it_css:traine)@mills it_css]$ qlogin -N 'Gaussian, C6H6 opt+freq'

Your job 75136 ("Gaussian, C6H6 opt+freq") has been submitted

:

[traine@n014 ~]$

Subsequent use of qstat will show the leading part of the assigned job name. The full name can be displayed using

[(it_css:traine)@mills it_css]$ qstat -j 75136 | grep job_name

job_name: Gaussian, C6H6 opt+freq

to allow the user to confirm the job's identity.

If the user neglected to leverage job naming, then the "shepherd pid" can be used on the compute node to display the processes associated with the orphan. Assume that the user received an email notification which listed an orphan with the GridEngine information: Job id: 75136 (shepherd pid: 3925 on n014). Logging in to n014 and using the pstree command on process 3925 shows all child processes associated with the shepherd:

[(it_css:traine)@mills it_css]$ ssh n014 pstree -a -p 3925

sge_shepherd,3925 -bg
  `-sshd,3926
      `-sshd,3927
          `-bash,3929 /home/software/GridEngine/6.2u7/default/spool/n014/job_scripts/75136
              `-g09,3930 c6h6-opt-freq.com

Thus, this particular orphan is running the g09 program with a single command line argument (c6h6-opt-freq.com).
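When a notification lists several orphans, the node and shepherd pid can be pulled out of each line and turned into the pstree command shown above. The following is a minimal sketch, assuming the line layout of the example email; the names `parse_orphan_line` and `pstree_command` are hypothetical helpers, not tools installed on the cluster.

```python
import re

# Layout assumed from the example notification:
#   <ssh pid>  Job id: <job id> (shepherd pid: <pid> on <node>)
LINE_RE = re.compile(
    r"^\s*(\d+)\s+Job id:\s*(\d+)\s+"
    r"\(shepherd pid:\s*(\d+)\s+on\s+(n\d{3})\)\s*$"
)


def parse_orphan_line(line):
    """Return a dict describing one orphan, or None if the line does not match."""
    m = LINE_RE.match(line)
    if m is None:
        return None
    ssh_pid, job_id, shepherd_pid, node = m.groups()
    return {
        "ssh_pid": int(ssh_pid),
        "job_id": int(job_id),
        "shepherd_pid": int(shepherd_pid),
        "node": node,
    }


def pstree_command(entry):
    """Build the argument list for inspecting the orphan's process tree."""
    return ["ssh", entry["node"], "pstree", "-a", "-p", str(entry["shepherd_pid"])]


if __name__ == "__main__":
    entry = parse_orphan_line("27571 Job id: 62048 (shepherd pid: 32427 on n018)")
    print(" ".join(pstree_command(entry)))
```

The resulting argument list can be passed to `subprocess.run` from the head node, or simply printed and pasted into the shell.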

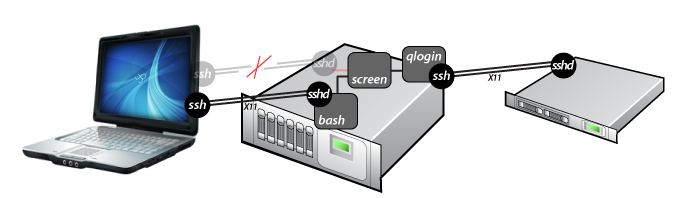

Mitigating Orphaning using Screen

Users who must run a lengthy computational task via qlogin should reduce the chance of their session being orphaned by issuing the qlogin command inside a screen process.

The screen command acts as the controlling terminal for the qlogin and its SSH session and allows the user to disconnect from and reconnect to them at will. With screen's default key bindings, Ctrl-a d detaches from the session, and screen -r on the head node reattaches to it later.

If you did not use screen to protect your qlogin session and it contains an active computational task that you would prefer NOT be killed automatically, follow the directions in the last paragraph of the message and reply to it. IT will mark the sessions you indicate so that they are not killed automatically; be sure to manually kill each one once its computation has completed! If any of the sessions are still active after two weeks, you will be notified again and must again request that the session not be killed, and so on.

Implementation Details

Identifying Orphans

Orphaned qlogin sessions are identified by their child /usr/bin/ssh process with the following criteria:

- A parent process id (ppid) of 1

- The optional presence of the X11-tunneling arguments, "-X -Y"

- The presence of a "-p [0-9]+" argument

- A final argument of a compute node, "n[0-9]{3}"
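The criteria above can be sketched as a small predicate over a process's parent pid and command line. This is an illustration only: the source does not show the service's actual matching code, so the function name `match_orphan` is hypothetical, and the sketch assumes that any other ssh options may appear between the listed arguments.

```python
import re
import shlex

NODE_RE = re.compile(r"n[0-9]{3}")   # compute node name, e.g. n010
PORT_RE = re.compile(r"[0-9]+")      # value following -p


def match_orphan(ppid, cmdline):
    """Return (port, node) if the process looks like an orphan, else None."""
    argv = shlex.split(cmdline)
    # Must be /usr/bin/ssh re-parented to init (ppid 1).
    if ppid != 1 or not argv or argv[0] != "/usr/bin/ssh":
        return None
    args = argv[1:]
    # The final argument must be a compute node.
    if not args or not NODE_RE.fullmatch(args[-1]):
        return None
    # A "-p <digits>" pair must be present; -X -Y is optional, so it is
    # tolerated but not required.
    for flag, value in zip(args, args[1:]):
        if flag == "-p" and PORT_RE.fullmatch(value):
            return int(value), args[-1]
    return None


if __name__ == "__main__":
    print(match_orphan(1, "/usr/bin/ssh -X -Y -p 4909 n010"))
```

In practice the (ppid, command line) pairs would come from a process listing on the head node, for example the output of `ps -eo pid,ppid,args`.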

All matching processes on the head node are deemed orphans. Each process has a unique identifier constructed for it using its vital statistics (in hexadecimal):

uid:gid:port:pid:pgrp:sessionid:starttime

e.g. 49B:49B:132D:100A:1009:1008:5F43D0

The chance of two qlogin orphans having all seven data points in common is extremely low. Even should user X in group Y somehow manage to get the same TCP/IP port, pid, pgrp, and sessionid assigned to the ssh process, the starttime monotonically increases between subsequent qlogin invocations and should be unique. The starttime is relative to the system boot time, so it will roll over when the head node is rebooted – but rebooting the head node will kill all qlogin sessions, anyway.
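The identifier format can be illustrated with a short sketch that joins the seven values as uppercase hexadecimal, unpadded, as in the example above. The function names `make_orphan_id` and `parse_orphan_id` are hypothetical; how the service itself assembles the string is not shown in this document.

```python
def make_orphan_id(uid, gid, port, pid, pgrp, session_id, start_time):
    """Join the seven vital statistics as colon-separated uppercase hex."""
    fields = (uid, gid, port, pid, pgrp, session_id, start_time)
    return ":".join(format(value, "X") for value in fields)


def parse_orphan_id(orphan_id):
    """Recover the seven integers from an identifier string."""
    return tuple(int(part, 16) for part in orphan_id.split(":"))


if __name__ == "__main__":
    # Decimal values corresponding to the example identifier.
    print(make_orphan_id(1179, 1179, 4909, 4106, 4105, 4104, 6243280))
```

Read back in decimal, the example identifier corresponds to uid and gid 1179, TCP/IP port 4909, pid 4106, pgrp 4105, sessionid 4104, and starttime 6243280.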

Maintaining State

An SQLite3 database (/opt/etc/qlogin-orphan.sqlite3db) is constructed which holds the data for orphans in the following table:

CREATE TABLE orphans (
  orphanId     CHARACTER VARYING(64) UNIQUE NOT NULL,
  uid          INTEGER NOT NULL,
  gid          INTEGER NOT NULL,
  tcpIpPort    INTEGER NOT NULL,
  pid          INTEGER NOT NULL,
  pgrp         INTEGER NOT NULL,
  sessionId    INTEGER NOT NULL,
  startTime    INTEGER NOT NULL,
  node         CHARACTER(4),
  jobId        TEXT,
  noAutoKill   INTEGER DEFAULT 0,
  created      TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
  notified     TIMESTAMP,
  killed       TIMESTAMP
);

- The noAutoKill flag is set by IT-NSS if and only if the owner indicates that the orphan is actively computing and should not be killed.

- The notified timestamp is set once the owner has been emailed to notify him/her that the process will be killed in 5 days.

- The killed timestamp is set once the notified timestamp is more than 5 days old AND the process has been issued a kill signal.

- Tuples in the table are removed after 14 days; any orphan not killed after 14 days will thus re-enter the cycle.

A session is marked for no automatic removal by setting its noAutoKill field in the database, e.g.

sqlite> UPDATE orphans SET noAutoKill=1 WHERE orphanId = '49B:49B:132D:100A:1009:1008:5F43D0';
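The lifecycle described in this section (notify, wait 5 days, kill unless noAutoKill is set, purge after 14 days) can be sketched against the table above with Python's sqlite3 module. The queries below are assumptions made for illustration: the service's real queries are not shown here, the helper names are hypothetical, and the sketch assumes that the 14-day purge is measured from the created timestamp.

```python
import sqlite3

SCHEMA = """
CREATE TABLE orphans (
  orphanId   CHARACTER VARYING(64) UNIQUE NOT NULL,
  uid        INTEGER NOT NULL,
  gid        INTEGER NOT NULL,
  tcpIpPort  INTEGER NOT NULL,
  pid        INTEGER NOT NULL,
  pgrp       INTEGER NOT NULL,
  sessionId  INTEGER NOT NULL,
  startTime  INTEGER NOT NULL,
  node       CHARACTER(4),
  jobId      TEXT,
  noAutoKill INTEGER DEFAULT 0,
  created    TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
  notified   TIMESTAMP,
  killed     TIMESTAMP
);
"""


def open_db(path=":memory:"):
    """Open a database and create the orphans table (in-memory by default)."""
    db = sqlite3.connect(path)
    db.execute(SCHEMA)
    return db


def needs_notification(db):
    """Orphans whose owner has not been emailed yet."""
    rows = db.execute(
        "SELECT orphanId FROM orphans WHERE notified IS NULL ORDER BY orphanId"
    )
    return [row[0] for row in rows]


def due_for_kill(db, now):
    """Orphans notified more than 5 days before `now`, not exempt, not yet killed.

    `now` is a 'YYYY-MM-DD HH:MM:SS' string, the format CURRENT_TIMESTAMP uses.
    """
    rows = db.execute(
        "SELECT orphanId FROM orphans "
        "WHERE noAutoKill = 0 AND killed IS NULL "
        "AND notified IS NOT NULL "
        "AND notified < datetime(?, '-5 days') "
        "ORDER BY orphanId",
        (now,),
    )
    return [row[0] for row in rows]


def mark_killed(db, orphan_id, now):
    """Record that a kill signal was issued for the orphan."""
    db.execute("UPDATE orphans SET killed = ? WHERE orphanId = ?", (now, orphan_id))


def purge_old(db, now):
    """Delete tuples created more than 14 days before `now`; return the count."""
    cur = db.execute(
        "DELETE FROM orphans WHERE created < datetime(?, '-14 days')", (now,)
    )
    return cur.rowcount
```

Passing `now` explicitly, rather than relying on the database clock, keeps each query easy to test against fixed dates.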